- Next 1%

New ChatGPT model is here: GPT-4o

ALSO: Google launched Project Astra, Veo, and Gemini 1.5 Flash

Welcome back, curious minds!

Today's newsletter is super exciting!

OpenAI and Google, the two big names in AI, just made some huge announcements. They shared cool new models and ideas.

Get ready for some wild updates!

So today, I'm covering only the latest announcements from OpenAI and Google:

OpenAI launched GPT-4o, a faster model now free for all ChatGPT users, plus a voice assistant, new vision features, and more…

Google announced yesterday:

Project Astra: Vision for AI assistants

Imagen 3 & Veo: New image and video generation models

Gemini 1.5 Flash: A lightweight multimodal model with long context, plus Gemini 1.5 Pro with a 2M-token context window

1. OpenAI launched a new AI model, GPT-4o, and a desktop version of ChatGPT

On Monday, OpenAI launched its newest, fastest, and most affordable model, GPT-4o.

The new flagship model can reason across audio, vision, and text in real-time.

ChatGPT currently comes in two tiers: the free version, powered by GPT-3.5, and the $20/month ChatGPT Plus, powered by GPT-4. The paid tier uses a bigger language model for better responses.

GPT-4o aims to improve the free version too, making it more conversational.

What's new in this model? According to OpenAI's Chief Technology Officer:

Mira Murati, OpenAI CTO, announced that GPT-4o will soon be available to everyone, including free users. The new features will be released in the coming weeks.

Paid users will get up to five times the message limits of free users.

GPT-4o is twice as fast and costs half as much as GPT-4 Turbo, which was released in late 2023 and offered more current responses and better text understanding.

It will support 50 languages and be available via API for developers to use.
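For developers curious what calling GPT-4o through the API might look like, here is a minimal sketch. It only builds a Chat Completions-style request body and sends nothing over the network. The helper name `build_chat_request` and the sample prompt are made up for illustration; the `gpt-4o` model name and the `messages` layout are my understanding of OpenAI's public request format, so check the official API docs before relying on it.

```python
import json


def build_chat_request(prompt: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions-style request body.

    Hypothetical helper for illustration only: it makes no network call.
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return {
        "model": model,  # model identifier announced by OpenAI
        "messages": [
            {"role": "user", "content": prompt},
        ],
    }


if __name__ == "__main__":
    body = build_chat_request("Summarize today's AI news in one sentence.")
    print(json.dumps(body, indent=2))
```

To actually send it, you would pass the same `model` and `messages` values to an official OpenAI client along with your own API key.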

OpenAI CTO Mira Murati / Source: Google Images

With GPT-4o, ChatGPT Free users can now:

Enjoy GPT-4 level intelligence

Get answers from the model and the web

Analyze data and create charts

Chat about photos you take

Upload files for help with summaries, writing, or analysis

Explore and use GPTs in the GPT Store

Enhance your experience with Memory

Make your work easier with the new ChatGPT Desktop App:

OpenAI just announced the ChatGPT (GPT-4o) desktop app, which can see your screen in real time.

With the GPT-4o/ChatGPT desktop app, you can have a coding buddy (black circle) that talks to you and sees what you see!

#openai announcements thread!

— Andrew Gao (@itsandrewgao)

5:21 PM • May 13, 2024

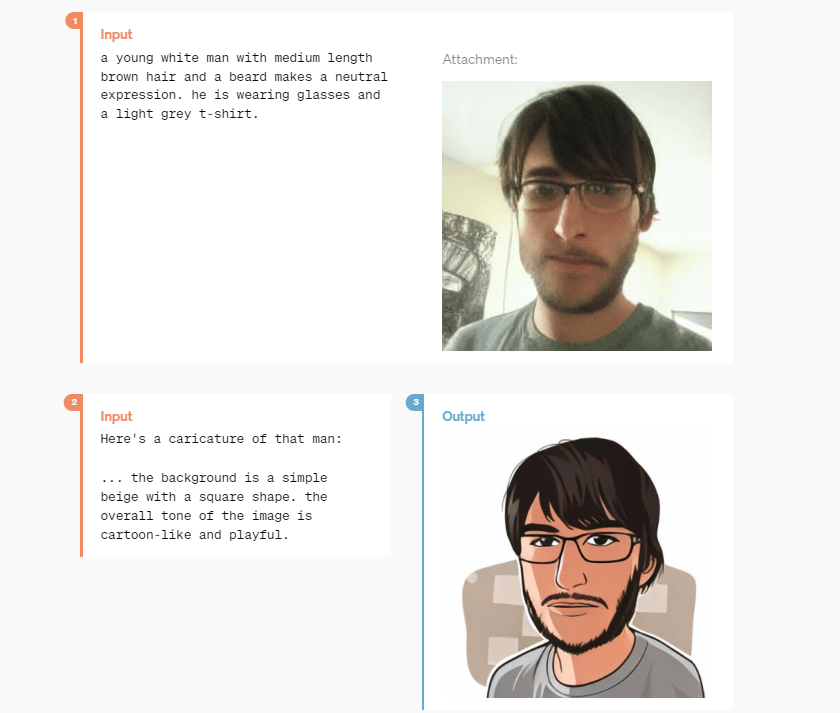

Image Generation with GPT-4o:

GPT-4o boasts powerful image generation capabilities, showcasing one-shot reference-based image generation and precise rendering of text inside images.

This new model promises more natural interaction by processing inputs and delivering outputs in real time.

Photo to caricature | Multiline rendering: robot texting

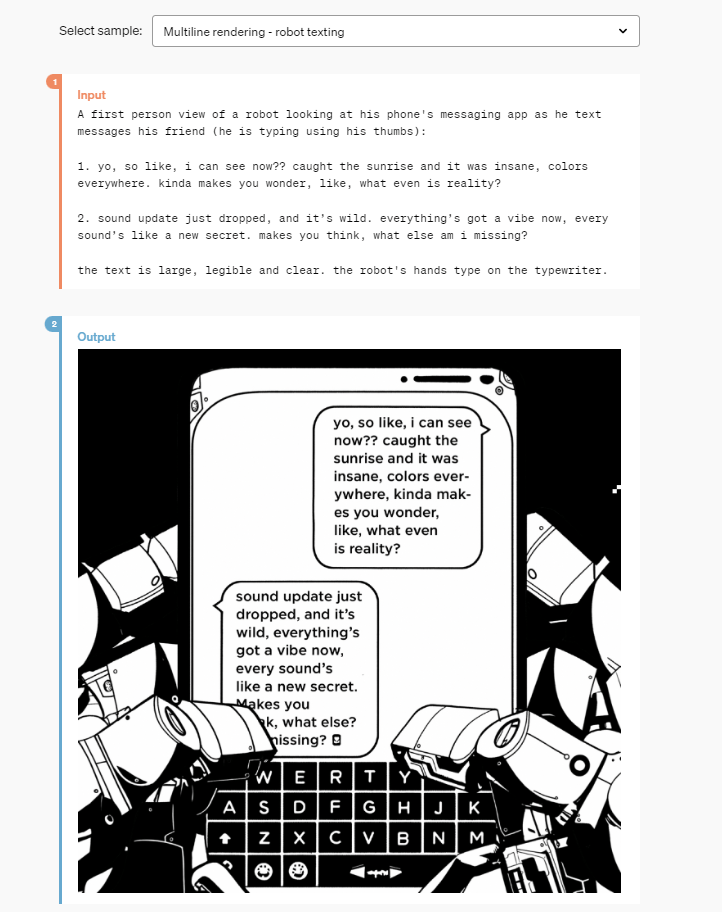

Model Evaluations:

GPT-4o sets new records on multiple benchmarks:

Text Performance: It sets a new high score of 87.2% on 5-shot MMLU (general knowledge questions).

Image credit: OpenAI

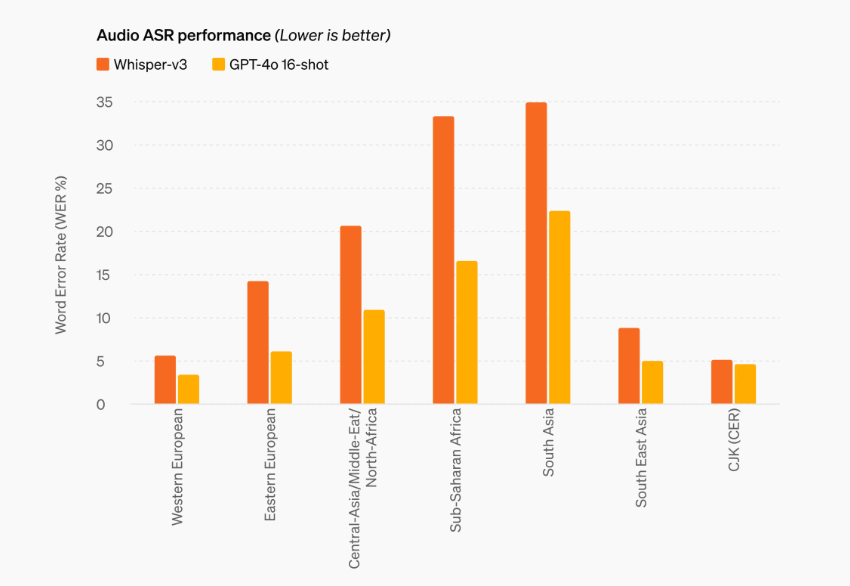

Audio and Vision: GPT-4o outperforms Whisper-v3 in speech recognition and translation and excels in visual perception benchmarks.

Key Features and Capabilities:

Real-Time Processing: GPT-4o responds to audio inputs in as little as 232 milliseconds, averaging around 320 milliseconds, which makes interactions smoother and more natural.

Enhanced Multimodal Abilities: GPT-4o matches GPT-4 Turbo in text and coding tasks, excels in vision and audio understanding, and improves non-English language performance.

Cost and Speed Efficiency: GPT-4o is faster and 50% cheaper in the API than GPT-4 Turbo, making advanced AI capabilities more accessible.
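To see what "50% cheaper" means for a budget, here is a quick back-of-the-envelope sketch. The function name `gpt4o_cost` and the $200 monthly bill are made-up numbers for illustration, not real OpenAI pricing; only the 50% discount comes from the announcement.

```python
def gpt4o_cost(gpt4_turbo_cost: float, discount: float = 0.5) -> float:
    """Estimate the GPT-4o cost for a workload that costs `gpt4_turbo_cost` on GPT-4 Turbo.

    `discount` is the announced 50% API price reduction; everything else is hypothetical.
    """
    if gpt4_turbo_cost < 0:
        raise ValueError("cost must be non-negative")
    if not 0 <= discount <= 1:
        raise ValueError("discount must be between 0 and 1")
    return gpt4_turbo_cost * (1 - discount)


if __name__ == "__main__":
    monthly_turbo_bill = 200.0  # hypothetical GPT-4 Turbo bill in USD
    print(gpt4o_cost(monthly_turbo_bill))  # prints 100.0
```

The same workload that cost a hypothetical $200 on GPT-4 Turbo would come to about $100 on GPT-4o, assuming identical usage.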

GPT-4o voice mode is impressive, and OpenAI's mind-blowing demos yesterday truly broke the Internet.

Let me share some demos with you:

2. Google just launched its multimodal AI agent Project Astra, a text-to-video model, and more…

It's Google I/O time now! Sundar Pichai and the team shared lots of new AI features from Mountain View, CA 😁

The event focused on software and AI like Google Gemini and updates for Android. Unlike past years, there were no hardware announcements.

Here are the 3 most important announcements:

1. Project Astra

Source: Google images

Google announced Project Astra, an AI assistant that uses your phone's video and voice to give smart answers. In one demo, it helped solve a coding problem and found someone's glasses.

2. Veo, an AI video generation tool, alongside Imagen 3

Google announced a new AI model called "Veo" for video creation, designed to turn users' creative ideas into video. They are also upgrading their image generation model to Imagen 3.

Source: Google

Imagen 3 makes visuals with incredible detail, realistic lighting, and fewer distracting artifacts, from quick sketches to high-res imagery.

3. Gemini 1.5 Flash

Google announced Gemini 1.5 Flash, a lightweight multimodal model with long context, along with a 2M-token context window for Gemini 1.5 Pro.

New model: Gemini 1.5 Flash / Source: Google Images

Gemini 1.5 Pro has a long context window starting at 1 million tokens. Since February, Google has improved its code generation, reasoning, planning, conversation, and understanding of audio and images.

Try it here: Google AI Studio

Gemini Advanced can process up to 1,500 pages or summarize 100 emails. You can upload files from Google Drive or your device for insights. Google says your files stay private and aren't used to train models.

And with that, that's a wrap!

Now it's your turn! Go on and build something amazing with the power of AI and open-source models.

Do you have any thoughts on today's topics? Please don't be shy, hit reply, and let's geek out together on 𝕏

Thank you for reading!

Until next time.